
There exist several methods of analysing meaning:

a) The definitional method is the study of dictionary definitions of words. In this analysis, data from various dictionaries are analysed comparatively, e.g.

b) Transformational analysis implies that dictionary definitions are subjected to transformational operations. For example, the definitions of the word dull (adj) are transformed as follows:

- ‘uninteresting’ → ‘deficient in interest or excitement’;
- ‘stupid’ → ‘deficient in intellect’;
- ‘not active’ → ‘deficient in activity’, etc.

In this way the semantic components of the analysed words are singled out. In the examples analysed, only denotative components have been singled out; they contain no connotative components.

c)

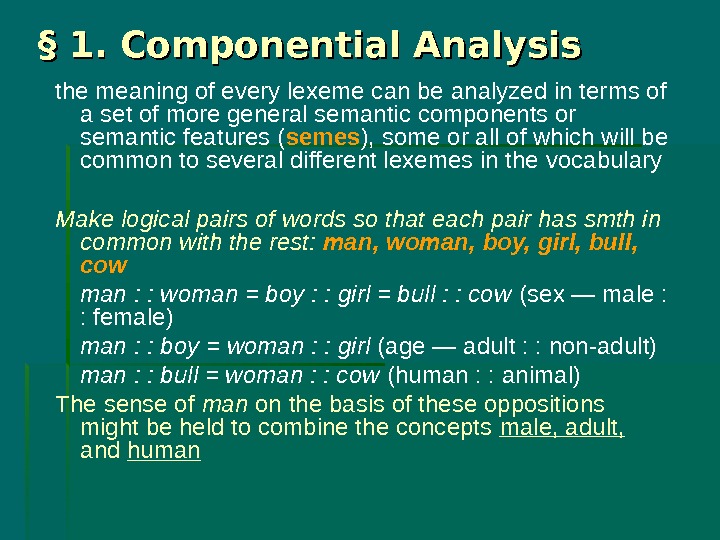

Componential

analysis

– is the distinguishing of semantic components of the analysed word

– denotation and connotation (if any). In the process of this

analysis the meaning of a word is defined as a set of elements of

meaning which are not part of the vocabulary of the language itself,

but rather theoretical elements. These theoretical elements are

necessary to describe the semantic relations between the lexical

elements of a given language.
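Componential analysis is often illustrated by describing words as bundles of binary semantic features. The sketch below uses a hypothetical feature inventory (HUMAN, ADULT, MALE) chosen only for illustration. In line with the text, these features are theoretical labels and not words of the language. The sketch shows which components two words share and which ones distinguish them.

```python
from typing import Dict, Set

# Hypothetical componential lexicon: word -> {semantic feature: value}.
# The features are theoretical labels, not words of the language itself.
LEXICON: Dict[str, Dict[str, bool]] = {
    "man":   {"HUMAN": True, "ADULT": True,  "MALE": True},
    "woman": {"HUMAN": True, "ADULT": True,  "MALE": False},
    "boy":   {"HUMAN": True, "ADULT": False, "MALE": True},
    "girl":  {"HUMAN": True, "ADULT": False, "MALE": False},
}

def shared_components(a: str, b: str) -> Dict[str, bool]:
    """Features on which both words carry the same value."""
    fa, fb = LEXICON[a], LEXICON[b]
    return {f: v for f, v in fa.items() if fb.get(f) == v}

def distinguishing_components(a: str, b: str) -> Set[str]:
    """Features on which the two words carry opposite values."""
    fa, fb = LEXICON[a], LEXICON[b]
    return {f for f in fa if f in fb and fa[f] != fb[f]}

print(shared_components("man", "boy"))            # {'HUMAN': True, 'MALE': True}
print(distinguishing_components("man", "woman"))  # {'MALE'}
```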

d) The semantic structure of a word is also studied through the word’s linear relationships with other words, i.e. through its combinability or collocability, in other words, its distribution. In this method, words are replaced by conventional word-class symbols (N for noun, V for verb, A for adjective); prepositions and conjunctions are not coded.

Thus, distributional analysis (the analysis of combinability or collocability) studies the semantics of a word through its occurrence with other words.
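The coding step of distributional analysis can be sketched as follows. The sketch assumes the input phrase is already tagged with word classes; the tag names and example phrases are hypothetical. The analysed word is kept as it is. Nouns, verbs and adjectives become N, V and A, and prepositions and conjunctions stay uncoded, as the text prescribes. Dropping determiners is an extra assumption of this sketch.

```python
from typing import List, Tuple

CODED = {"N", "V", "A"}        # word classes replaced by their symbols
UNCODED = {"PREP", "CONJ"}     # prepositions and conjunctions are not coded

def distribution_pattern(tagged: List[Tuple[str, str]], target: str) -> str:
    """Turn a tagged phrase into a distributional formula around `target`."""
    parts = []
    for word, tag in tagged:
        if word == target:
            parts.append(word)     # the analysed word itself stays as it is
        elif tag in CODED:
            parts.append(tag)      # N, V or A
        elif tag in UNCODED:
            parts.append(word)     # kept verbatim, not coded
        # any other class (e.g. determiners) is skipped in this sketch
    return " + ".join(parts)

print(distribution_pattern([("make", "V"), ("a", "DET"), ("machine", "N")], "make"))
# make + N
print(distribution_pattern([("make", "V"), ("somebody", "N"), ("laugh", "V")], "make"))
# make + N + V
```

Comparing such formulas across many occurrences is what lets the method separate, for example, the ‘make + N’ uses of a verb from its ‘make + N + V’ uses.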

e) Close to the previous method is contextual analysis. It rests on the assumption that a difference in the meaning of linguistic units is always indicated by a difference in their environment. It studies the interaction of a polysemantic word with its syntactic and lexical environment. Context is divided into lexical, syntactic and mixed.

As a rule, the meaning is indicated by syntactic, lexical and morphological factors combined. The contextual method makes the study of details and specific features more exact.


From Wikipedia, the free encyclopedia

In linguistics, semantic analysis is the process of relating syntactic structures, from the levels of phrases, clauses, sentences and paragraphs to the level of the writing as a whole, to their language-independent meanings. It also involves removing features specific to particular linguistic and cultural contexts, to the extent that such a project is possible. The elements of idiom and figurative speech, being cultural, are often also converted into relatively invariant meanings in semantic analysis. Semantics, although related to pragmatics, is distinct in that the former deals with word or sentence choice in any given context, while pragmatics considers the unique or particular meaning derived from context or tone. To reiterate in different terms, semantics is about universally coded meaning, and pragmatics, the meaning encoded in words that is then interpreted by an audience.[1]

Semantic analysis can begin with the relationship between individual words. This requires an understanding of lexical hierarchy, including hyponymy and hypernymy, meronymy, polysemy, synonyms, antonyms, and homonyms.[2] It also relates to concepts like connotation (in the semiotic sense) and collocation, which is the particular combination of words that can be, or frequently are, found around a single word. This can include idioms, metaphor, and simile, such as “white as a ghost”.

With the availability of enough material to analyze, semantic analysis can be used to catalog and trace the style of writing of specific authors.[3]

See also

- Lexical analysis

- Discourse analysis

- Semantic analysis (machine learning)

- Literal and figurative language

- Translation

- Semantic structure analysis

- Sememe

References

1. Goddard, Cliff (2013). Semantic Analysis: An Introduction (2nd ed.). New York: Oxford University Press. p. 17.
2. Manning, Christopher; Schütze, Hinrich (1999). Foundations of Statistical Natural Language Processing. Cambridge: MIT Press. p. 110. ISBN 9780262133609.
3. Miranda-García, Antonio; Calle-Martín, Javier (May 2012). “The Authorship of the Disputed Federalist Papers with an Annotated Corpus”. English Studies. 93 (3): 371–390. doi:10.1080/0013838x.2012.668795. S2CID 162248379.

The purpose of semantic analysis is to draw the exact meaning, or dictionary meaning, from the text. The job of a semantic analyzer is to check the text for meaningfulness.

Lexical analysis also deals with the meaning of words, so how is semantic analysis different from it? Lexical analysis works on smaller tokens, whereas semantic analysis focuses on larger chunks. That is why semantic analysis can be divided into the following two parts:

Studying the meaning of individual words

This is the first part of semantic analysis, in which the meanings of individual words are studied. This part is called lexical semantics.

Studying the combination of individual words

In the second part, individual words are combined to convey meaning in sentences.

The most important task of semantic analysis is to get the proper meaning of the sentence. For example, consider the sentence “Ram is great.” Here the speaker is talking either about Lord Ram or about a person whose name is Ram. That is why the semantic analyzer’s job of getting the proper meaning of the sentence is important.

Elements of Semantic Analysis

The following are some important elements of semantic analysis:

Hyponymy

Hyponymy may be defined as the relationship between a generic term and instances of that generic term. The generic term is called the hypernym and its instances are called hyponyms. For example, the word color is a hypernym, and the colors blue, yellow, etc. are its hyponyms.
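Hyponymy is transitive, so a lexicon can store only the nearest hypernym of each word and recover the rest by following the chain upwards. The sketch below assumes a small, hypothetical, acyclic hypernym table that extends the color example. It is an illustration of the relation, not a real lexical database.

```python
from typing import Dict, List

# Hypothetical hypernym table: word -> its immediate hypernym (assumed acyclic).
HYPERNYM: Dict[str, str] = {
    "blue": "color",
    "yellow": "color",
    "navy": "blue",
}

def hypernym_chain(word: str) -> List[str]:
    """All hypernyms of `word`, from the nearest to the most generic."""
    chain = []
    while word in HYPERNYM:
        word = HYPERNYM[word]
        chain.append(word)
    return chain

def is_hyponym(word: str, generic: str) -> bool:
    """True if `word` is a direct or indirect hyponym of `generic`."""
    return generic in hypernym_chain(word)

print(hypernym_chain("navy"))         # ['blue', 'color']
print(is_hyponym("yellow", "color"))  # True
print(is_hyponym("color", "blue"))    # False
```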

Homonymy

Homonymy may be defined as words having the same spelling or form but different and unrelated meanings. For example, “bat” is a homonym because it can mean an implement used to hit a ball or a nocturnal flying mammal.

Polysemy

Polysemy is a word of Greek origin meaning “many signs”. It refers to a word or phrase with different but related senses. In other words, a polysemous word has one spelling but different, related meanings. For example, the word “bank” is polysemous and has the following meanings:

- A financial institution.
- The building in which such an institution is located.
- A synonym for “to rely on” (as in “to bank on”).

Difference between Polysemy and Homonymy

Polysemous and homonymous words both share the same spelling (form). The main difference is that in polysemy the meanings are related, whereas in homonymy they are not. For example, the word “bank” can mean ‘a financial institution’ or ‘a river bank’. In that case it is an example of homonymy, because the two meanings are unrelated.
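One common way to encode this distinction is to follow the practice of dictionaries, and this sketch adopts that convention as an assumption. Related senses are grouped under one entry, which signals polysemy. Unrelated meanings get separate entries with the same spelling, which signals homonymy. The mini-dictionary below is hypothetical and reuses the examples from the text.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Entry:
    """One lexeme: a spelling plus a group of related senses."""
    form: str
    senses: List[str]

# Hypothetical mini-dictionary built from the examples above.
DICTIONARY = [
    Entry("bank", ["a financial institution",
                   "the building in which such an institution is located",
                   "to rely on"]),
    Entry("bank", ["the land alongside a river"]),
    Entry("bat", ["an implement used to hit a ball"]),
    Entry("bat", ["a nocturnal flying mammal"]),
]

def entries_for(form: str) -> List[Entry]:
    return [e for e in DICTIONARY if e.form == form]

def is_homonymous(form: str) -> bool:
    """Several separate entries share the form: unrelated meanings."""
    return len(entries_for(form)) > 1

def is_polysemous(form: str) -> bool:
    """At least one entry groups several related senses."""
    return any(len(e.senses) > 1 for e in entries_for(form))

print(is_homonymous("bank"), is_polysemous("bank"))  # True True
print(is_homonymous("bat"), is_polysemous("bat"))    # True False
```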

Synonymy

It is the relation between two lexical items having different forms but expressing the same or a close meaning. Examples are ‘author/writer’, ‘fate/destiny’.

Antonymy

It is the relation between two lexical items whose semantic components are symmetric relative to an axis. Antonymy covers the following cases:

- Application of a property or not: e.g. ‘life/death’, ‘certitude/incertitude’.
- Application of a scalable property: e.g. ‘rich/poor’, ‘hot/cold’.
- Application of a usage: e.g. ‘father/son’, ‘moon/sun’.

Meaning Representation

Semantic analysis creates a representation of the meaning of a sentence. Before getting into the concepts and approaches related to meaning representation, we need to understand the building blocks of a semantic system.

Building Blocks of Semantic System

In representing the meaning of words, the following building blocks play an important role:

- Entities: represent individuals, such as a particular person or location. For example, Haryana, India and Ram are all entities.
- Concepts: represent general categories of individuals, such as person, city, etc.
- Relations: represent relationships between entities and concepts. For example, “Ram is a person.”
- Predicates: represent verb structures. For example, semantic roles and case grammar are examples of predicates.

Meaning representation therefore shows how to put together the building blocks of a semantic system. In other words, it shows how entities, concepts, relations and predicates combine to describe a situation. It also enables reasoning about the semantic world.
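The four building blocks can be held in a very small data structure, as sketched below. The class name, the single “is a” relation type and the predicate `live_in` are hypothetical choices for illustration. Entities and concepts are plain strings, relations link an entity to a concept, and predicates are stored as a verb followed by its arguments.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple

@dataclass
class SemanticModel:
    """Hypothetical container for entities, concepts, relations and predicates."""
    entities: Set[str] = field(default_factory=set)                # Ram, India, ...
    concepts: Set[str] = field(default_factory=set)                # person, country, ...
    relations: Set[Tuple[str, str]] = field(default_factory=set)   # (entity, concept): "is a"
    predicates: Set[Tuple[str, ...]] = field(default_factory=set)  # (verb, arg1, arg2, ...)

    def is_a(self, entity: str, concept: str) -> bool:
        return (entity, concept) in self.relations

model = SemanticModel()
model.entities |= {"Ram", "India", "Haryana"}
model.concepts |= {"person", "country", "state"}
model.relations |= {("Ram", "person"), ("India", "country"), ("Haryana", "state")}
model.predicates.add(("live_in", "Ram", "India"))  # hypothetical fact for illustration

print(model.is_a("Ram", "person"))    # True
print(model.is_a("India", "person"))  # False
```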

Approaches to Meaning Representations

Semantic analysis uses the following approaches to represent meaning:

- First-order predicate logic (FOPL)
- Semantic Nets
- Frames
- Conceptual dependency (CD)
- Rule-based architecture
- Case Grammar
- Conceptual Graphs
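Of the approaches listed, semantic nets are among the simplest to sketch. Nodes are linked by “is a” edges, and a node inherits the properties of the nodes above it unless it overrides them locally. The net below is hypothetical and only illustrates property inheritance. It is not a standard semantic-net formalism.

```python
from typing import Dict, Optional

# Hypothetical semantic net: "is_a" links between nodes.
IS_A: Dict[str, str] = {"Ram": "person", "person": "mammal", "mammal": "animal"}

# Properties stored locally on each node; more specific nodes override general ones.
PROPS: Dict[str, Dict[str, object]] = {
    "animal": {"alive": True},
    "mammal": {"legs": 4},
    "person": {"legs": 2, "can_speak": True},
    "Ram": {"name": "Ram"},
}

def lookup(node: str, prop: str) -> Optional[object]:
    """Return `prop` for `node`, inheriting it along is_a links if needed."""
    current: Optional[str] = node
    while current is not None:
        local = PROPS.get(current, {})
        if prop in local:
            return local[prop]
        current = IS_A.get(current)  # climb one level up the hierarchy
    return None                      # property not found anywhere on the chain

print(lookup("Ram", "alive"))  # True, inherited from "animal"
print(lookup("Ram", "legs"))   # 2, "person" overrides "mammal"
print(lookup("Ram", "wings"))  # None
```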

Need for Meaning Representations

A question that arises here is why we need meaning representation at all. The reasons are as follows:

Linking of linguistic elements to non-linguistic elements

First, meaning representation makes it possible to link linguistic elements to non-linguistic elements.

Representing variety at the lexical level

With the help of meaning representation, unambiguous, canonical forms can be represented at the lexical level.

Can be used for reasoning

Meaning representation can be used to reason about and verify what is true in the world, and to infer knowledge from the semantic representation.
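As a small illustration of reasoning over a first-order representation, the sketch below derives new facts by forward chaining. It makes strong simplifying assumptions. Facts are ground atoms with a single argument, such as person(Ram). Rules have the form “for all x, P(x) → Q(x)”. The knowledge base is hypothetical. Real FOPL reasoners handle arbitrary arity, variables and quantifiers.

```python
from typing import List, Set, Tuple

Fact = Tuple[str, str]  # (predicate, constant), e.g. ("person", "Ram") for person(Ram)
Rule = Tuple[str, str]  # (P, Q) encodes the formula: for all x, P(x) -> Q(x)

def forward_chain(facts: Set[Fact], rules: List[Rule]) -> Set[Fact]:
    """Apply every rule (modus ponens) until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for pred, const in list(known):  # iterate over a snapshot
                if pred == premise and (conclusion, const) not in known:
                    known.add((conclusion, const))
                    changed = True
    return known

facts = {("person", "Ram")}
rules = [("person", "mammal"), ("mammal", "mortal")]  # hypothetical knowledge base
derived = forward_chain(facts, rules)
print(("mortal", "Ram") in derived)    # True
print(("mortal", "India") in derived)  # False
```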

Lexical Semantics

The first part of semantic analysis, studying the meaning of individual words, is called lexical semantics. It covers words, sub-words, affixes (sub-units), compound words and phrases; all of these are collectively called lexical items. In other words, lexical semantics studies the relationship between lexical items, the meaning of sentences and the syntax of sentences.

The following steps are involved in lexical semantics:

- Classification of lexical items such as words, sub-words and affixes.
- Decomposition of lexical items such as words, sub-words and affixes.
- Analysis of the differences and similarities between various lexical semantic structures.

Semantic Analysis

Humans interact with each other through speech and text, using what is called natural language. Computers understand human natural language through Natural Language Processing (NLP).

NLP is the process of manipulating human speech or text through artificial intelligence so that computers can understand it. It has made interaction between humans and computers much easier.


Human language carries many meanings beyond the literal meaning of the words. Many words have several meanings, and a sentence can carry different tones, such as emotional or sarcastic. It is very hard for computers to interpret the meaning of such sentences.

Semantic analysis is a subfield of NLP and machine learning that helps in understanding the context of a text and the emotions that might be expressed in it. This helps computers extract important information and approach human-level accuracy. Semantic analysis is used in tools such as machine translation, chatbots, search engines and text analytics.

In this blog, you will learn how semantic analysis works and which techniques it uses.

How does Semantic Analysis work?

Lexical semantics, the study of the meaning of individual words, is an important part of semantic analysis. In semantic analysis, the relations between lexical items are identified. Some of these relations are hyponymy, synonymy, antonymy, homonymy, etc.

Let us look at these relations in detail:

- Hyponymy: the connection between a generic word and its instances. The generic term is known as the hypernym, while the instances are known as hyponyms.
- Homonymy: words with the same spelling or form but different and unrelated meanings.
- Polysemy: a term or phrase that has different but related meanings. To put it another way, a polysemous word has one spelling but several related meanings.
- Synonymy: the relationship between two lexical items that have different forms but express the same or a similar meaning.
- Antonymy: the relationship between two lexical items whose semantic components are symmetric with respect to an axis.
- Meronymy: the relationship between a part (or member) and the whole it belongs to; for example, ‘wheel’ is a meronym of ‘car’.

By identifying these relations and taking symbols and punctuation into account, the machine can identify the context of a sentence or paragraph.


Meaning Representation:

Semantic analysis builds a representation of the meaning of a sentence, using different processes and methods. Let us discuss some building blocks of the semantic system:

- Entities: a sentence is made of different entities that are related to each other. An entity is an individual of some category, such as a name, place or position. We will discuss entities and their relationships in more detail later in this blog.
- Concepts: represent the general category of individuals, such as person, city, etc.
- Relations: represent the relations between different entities and concepts in a sentence.
- Predicates: represent the verb structure of a sentence.

There are different approaches to meaning representation; some of them are listed below:

- First-order predicate logic (FOPL)
- Frames
- Semantic Nets
- Case Grammar
- Rule-based architecture
- Conceptual graphs
- Conceptual dependency (CD)


Meaning Representation is very important in Semantic Analysis because:

- It helps link the linguistic elements of a sentence to non-linguistic elements.
- It helps represent unambiguous data at the lexical level.
- It helps in reasoning and in verifying the correctness of data.

Processes of Semantic Analysis:

The following are some of the processes of Semantic Analysis:

Word Sense Disambiguation:

Word sense disambiguation is the automatic process of identifying the sense in which a word is used in a given sentence. In natural language, one word can have many meanings. For example, the word ‘light’ can mean ‘not very dark’ or ‘not very heavy’. The computer has to understand the entire sentence and pick the meaning that fits best.
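The blog does not say which algorithm performs word sense disambiguation. One classic option is the simplified Lesk algorithm, sketched below as a swapped-in illustration. It picks the sense whose dictionary gloss shares the most words with the sentence. The glosses for ‘light’ and the stop-word list are hypothetical.

```python
import re
from typing import Dict, Set

# Hypothetical sense inventory for "light": sense label -> short dictionary-style gloss.
SENSES: Dict[str, str] = {
    "not dark": "full of brightness, so that it is easy to see, as in a bright room",
    "not heavy": "of little weight, easy to lift or carry, as in a bag or load",
}

STOPWORDS = {"a", "an", "and", "as", "in", "is", "it", "of", "or", "so", "that", "the", "to", "was"}

def content_words(text: str) -> Set[str]:
    """Lower-cased words of `text` minus a small stop-word list."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}

def simplified_lesk(sentence: str, senses: Dict[str, str]) -> str:
    """Return the sense whose gloss overlaps most with the sentence (first one wins ties)."""
    context = content_words(sentence)
    return max(senses, key=lambda s: len(content_words(senses[s]) & context))

print(simplified_lesk("The bag was light and easy to carry.", SENSES))  # not heavy
print(simplified_lesk("The room was light and bright.", SENSES))        # not dark
```

Because it relies only on word overlap, the method is sensitive to how the glosses are worded. Statistical and neural disambiguators usually do better on real text.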

Relationship Extraction:

A sentence often contains entities that are related to each other. Relationship extraction is the process of extracting the semantic relationship between these entities. In the sentence “I am learning mathematics”, there are two entities, ‘I’ and ‘mathematics’, and the relation between them is expressed by the verb ‘learn’.
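A very simple rule-based version of this idea is sketched below for a plain subject-verb-object clause. The sketch assumes the tokens are already tagged by an upstream tagger, with tag names in the style of Universal Dependencies POS tags. The lemma table is a hypothetical stand-in for a real lemmatizer. Practical systems use parsers or trained models instead of such rules.

```python
from typing import List, Optional, Tuple

Token = Tuple[str, str]             # (word, POS tag), assumed to come from a tagger
LEMMAS = {"learning": "learn"}      # hypothetical stand-in for a real lemmatizer

def extract_relation(tokens: List[Token]) -> Optional[Tuple[str, str, str]]:
    """Return (entity1, relation, entity2) for a simple subject-verb-object clause."""
    subj = verb = obj = None
    for word, tag in tokens:
        if tag in {"PRON", "NOUN"}:
            if verb is None and subj is None:
                subj = word                      # first nominal before the verb
            elif verb is not None and obj is None:
                obj = word                       # first nominal after the verb
        elif tag == "VERB":
            verb = LEMMAS.get(word, word)        # auxiliaries (AUX) are ignored
    if subj and verb and obj:
        return (subj, verb, obj)
    return None

sentence = [("I", "PRON"), ("am", "AUX"), ("learning", "VERB"), ("mathematics", "NOUN")]
print(extract_relation(sentence))  # ('I', 'learn', 'mathematics')
```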


Techniques of Semantic Analysis:

There are two types of semantic analysis techniques, depending on the type of information you want to extract from the data: semantic classifiers and semantic extractors. Let us briefly discuss them.

Semantic Classification models:

These are text classification models that assign predefined categories to a given text.

Topic classification:

Topic classification processes texts and sorts them into known, predefined categories based on their content.

For example, a delivery company can use machine learning to automatically separate customer service problems such as ‘payment issues’ and ‘delivery problems’. This helps the team notice issues faster and solve them.
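The text does not name a model for topic classification. A multinomial Naive Bayes classifier with add-one smoothing is a common baseline, and it is sketched here from scratch as a swapped-in example. The training messages and the two categories are hypothetical. They echo the delivery-company scenario.

```python
import math
import re
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z]+", text.lower())

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs: List[Tuple[str, str]]) -> "NaiveBayes":
        self.doc_counts = Counter(label for _, label in docs)
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        for text, label in docs:
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = "", -math.inf
        for label in self.doc_counts:
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(self.doc_counts[label] / total_docs)  # log prior
            for w in tokenize(text):
                score += math.log((counts[w] + 1) / denom)          # smoothed log likelihood
            if score > best_score:
                best_label, best_score = label, score
        return best_label

train = [  # hypothetical labelled customer messages
    ("I was charged twice for my order", "payment issues"),
    ("my card payment failed", "payment issues"),
    ("please refund the extra charge", "payment issues"),
    ("my parcel arrived late", "delivery problems"),
    ("the courier left the package at the wrong address", "delivery problems"),
    ("delivery is still not here", "delivery problems"),
]
model = NaiveBayes().fit(train)
print(model.predict("payment was charged twice"))  # payment issues
print(model.predict("the parcel is late"))         # delivery problems
```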


Sentiment analysis:

Sentiment analysis detects the sentiment hidden inside a text, whether positive, negative or neutral. It helps in understanding the urgency of a statement. On social media, customers often reveal their opinions about a company.

For example, someone might comment, “The customer service of this company is a joke!” If the sentiment here is not properly analysed, the machine might treat the word “joke” as positive.
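The “joke” pitfall can be reproduced with a lexicon-based scorer, sketched below. The lexicon is hypothetical, and “joke” is deliberately scored +1 to mimic a naive word-level reading. A multi-word entry for “is a joke” corrects the score in context. This is an illustration only. Production systems usually learn such patterns from labelled data instead of hand-written lists.

```python
import re
from typing import Dict, Tuple

# Hypothetical polarity lexicons. "joke" is +1 on purpose (naive word-level reading);
# the multi-word entry "is a joke" overrides it when the whole phrase occurs.
WORDS: Dict[str, int] = {"great": 1, "helpful": 1, "joke": 1, "slow": -1, "rude": -1}
PHRASES: Dict[Tuple[str, ...], int] = {("is", "a", "joke"): -2}

def sentiment(text: str, phrases: Dict[Tuple[str, ...], int] = PHRASES) -> str:
    """Label text positive/negative/neutral by summing phrase and word scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score, i = 0, 0
    while i < len(tokens):
        for phrase, value in phrases.items():
            if tuple(tokens[i:i + len(phrase)]) == phrase:
                score += value
                i += len(phrase)   # consume the whole phrase so "joke" is not rescored
                break
        else:                      # no phrase starts here: score the single word
            score += WORDS.get(tokens[i], 0)
            i += 1
    if score > 0:
        return "positive"
    return "negative" if score < 0 else "neutral"

review = "The customer service of this company is a joke!"
print(sentiment(review, phrases={}))  # positive (word-level reading of "joke")
print(sentiment(review))              # negative
```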

Latent Semantic Analysis (LSA): a method for extracting and expressing the contextual-usage meaning of words by applying statistical calculations to a large corpus of text. LSA is an information retrieval approach that finds patterns in unstructured text collections and the relationships between them.
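LSA is usually implemented as a truncated singular value decomposition (SVD) of a term-document matrix. The sketch below uses NumPy on a tiny, hypothetical corpus of four two-word “documents”. It shows the characteristic effect of LSA. “payment” and “refund” never occur in the same document, so their raw cosine similarity is 0. After projection onto two latent dimensions they become almost identical, because both co-occur with “charge”.

```python
import numpy as np

# Hypothetical term-document counts (rows = terms, columns = 4 tiny documents):
# d0 = "payment charge", d1 = "refund charge", d2 = "parcel courier", d3 = "courier delivery"
X = np.array([
    [1, 0, 0, 0],  # payment
    [0, 1, 0, 0],  # refund
    [1, 1, 0, 0],  # charge
    [0, 0, 1, 0],  # parcel
    [0, 0, 1, 1],  # courier
    [0, 0, 0, 1],  # delivery
], dtype=float)

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Truncated SVD: keep only the k strongest latent dimensions.
k = 2
U, s, Vt = np.linalg.svd(X, full_matrices=False)
term_vecs = U[:, :k] * s[:k]  # each row: one term in the k-dimensional latent space

print(cosine(X[0], X[1]))                  # 0.0: "payment" and "refund" never co-occur
print(cosine(term_vecs[0], term_vecs[1]))  # close to 1.0: both co-occur with "charge"
print(cosine(term_vecs[0], term_vecs[3]))  # close to 0.0: different topic
```

In practice the counts are usually weighted (for example with TF-IDF) before the decomposition, and k is in the hundreds rather than 2.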

Intent classification:

Intent classification sorts text according to the intent behind it. For example, customers might be interested or uninterested in your company or services, and knowing in advance whether someone is interested helps you proactively reach out to your real customer base.

Semantic Extraction Models:

Keyword Extraction:

Keyword extraction pulls out the relevant words and expressions in a text to gain granular insights. It is mostly used together with classification models, for example to analyze the keywords in a corpus and detect which are ‘negative’ and which are ‘positive’. The most frequently mentioned topics or words can give insight into the intent of the text.
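The blog does not specify a keyword-weighting scheme. TF-IDF is a widely used choice, and the sketch below uses it as a swapped-in example. It ranks the words of one document by how frequent they are in that document and how rare they are in the rest of the corpus. The corpus and the stop-word list are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

STOPWORDS = {"the", "for", "my", "is", "but", "did", "not", "a", "of"}

def tokenize(text: str) -> List[str]:
    """Lower-cased words with a small stop-word list removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]

def top_keywords(docs: List[str], doc_index: int, k: int = 3) -> List[Tuple[str, float]]:
    """Rank the words of docs[doc_index] by TF-IDF against the whole corpus."""
    tokenized = [tokenize(d) for d in docs]
    df = Counter(w for toks in tokenized for w in set(toks))  # documents containing each word
    tf = Counter(tokenized[doc_index])
    if not tf:
        return []
    length = sum(tf.values())
    scores = {w: (c / length) * math.log(len(docs) / df[w]) for w, c in tf.items()}
    # highest score first; ties broken alphabetically so the output is deterministic
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:k]

docs = [  # hypothetical customer messages
    "The refund for my payment is late",
    "The courier lost my parcel",
    "The parcel arrived but the refund did not",
]
print([w for w, _ in top_keywords(docs, 0, k=2)])  # ['late', 'payment']
```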

Entity extraction:

As mentioned earlier in this blog, any sentence or phrase is made up of different entities, such as names of people, places, companies and positions. Entity extraction identifies these entities and extracts them.

It can be very useful for the customer service teams of businesses such as delivery companies, since the machine can automatically extract customers’ names, locations, shipping numbers, contact information and other relevant data.
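For entities with a fixed format, such as shipping numbers, phone numbers and e-mail addresses, regular expressions are often enough. The sketch below assumes hypothetical formats. Shipping numbers are taken to be two letters, nine digits and two letters. Phone numbers are taken to be an optional “+” followed by digits, spaces or hyphens. Names and places generally require a trained named-entity recognition model, which is not shown here.

```python
import re
from typing import Dict, List

# Hypothetical entity formats; names and places would need a trained NER model instead.
PATTERNS = {
    "tracking_number": re.compile(r"\b[A-Z]{2}\d{9}[A-Z]{2}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # lookarounds stop the phone pattern from matching digits inside other tokens
    "phone": re.compile(r"(?<![\w+])\+?\d[\d\s-]{7,}\d(?!\w)"),
}

def extract_entities(text: str) -> Dict[str, List[str]]:
    """Return every match of every pattern, grouped by entity type."""
    return {label: pattern.findall(text) for label, pattern in PATTERNS.items()}

message = ("Hi, I'm Anna. Parcel RR123456789US has not arrived; "
           "call me on +44 20 7946 0958 or mail anna@example.com.")
print(extract_entities(message))
```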


Conclusion

In any customer-centric business, it is very important for companies to learn about their customers and gather insights from customer feedback in order to improve and provide a better user experience.

With the help of machine learning models and semantic analysis, machines can extract meaning from unstructured data gathered from a company’s customer base in real time. This gives the company accurate feedback that drives better decision-making and, as a result, helps grow the customer base.